Annotation format

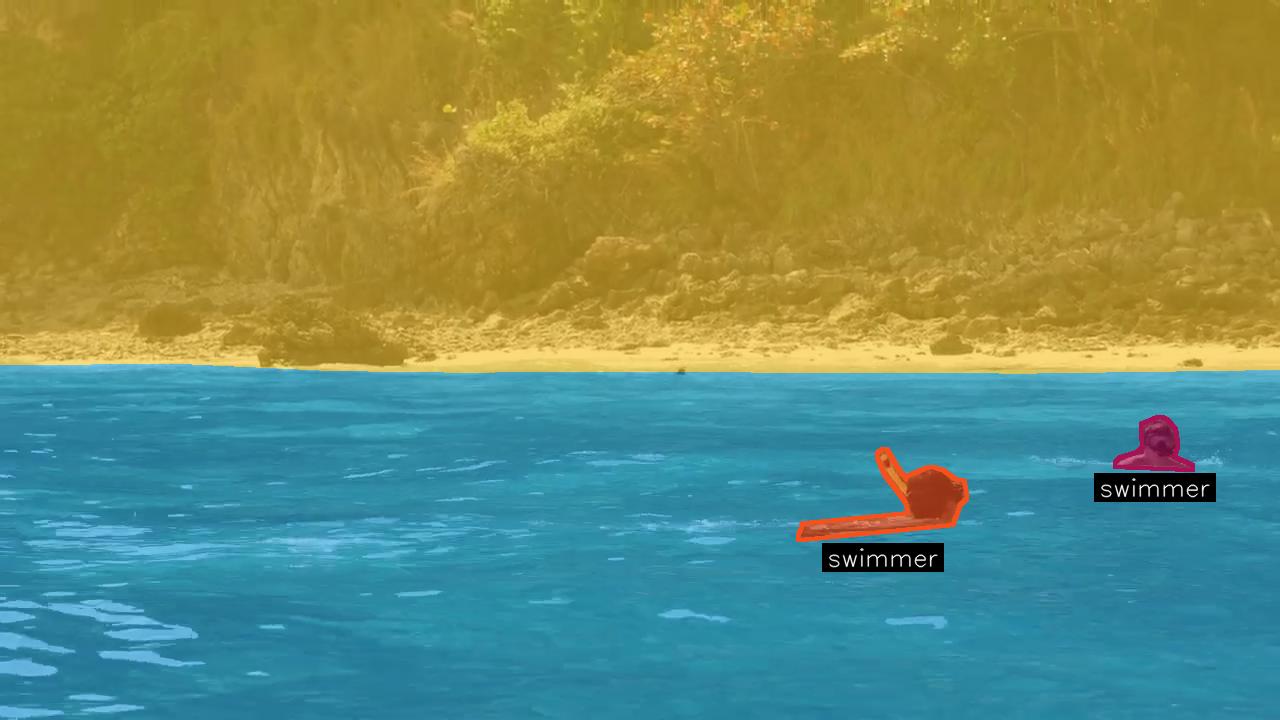

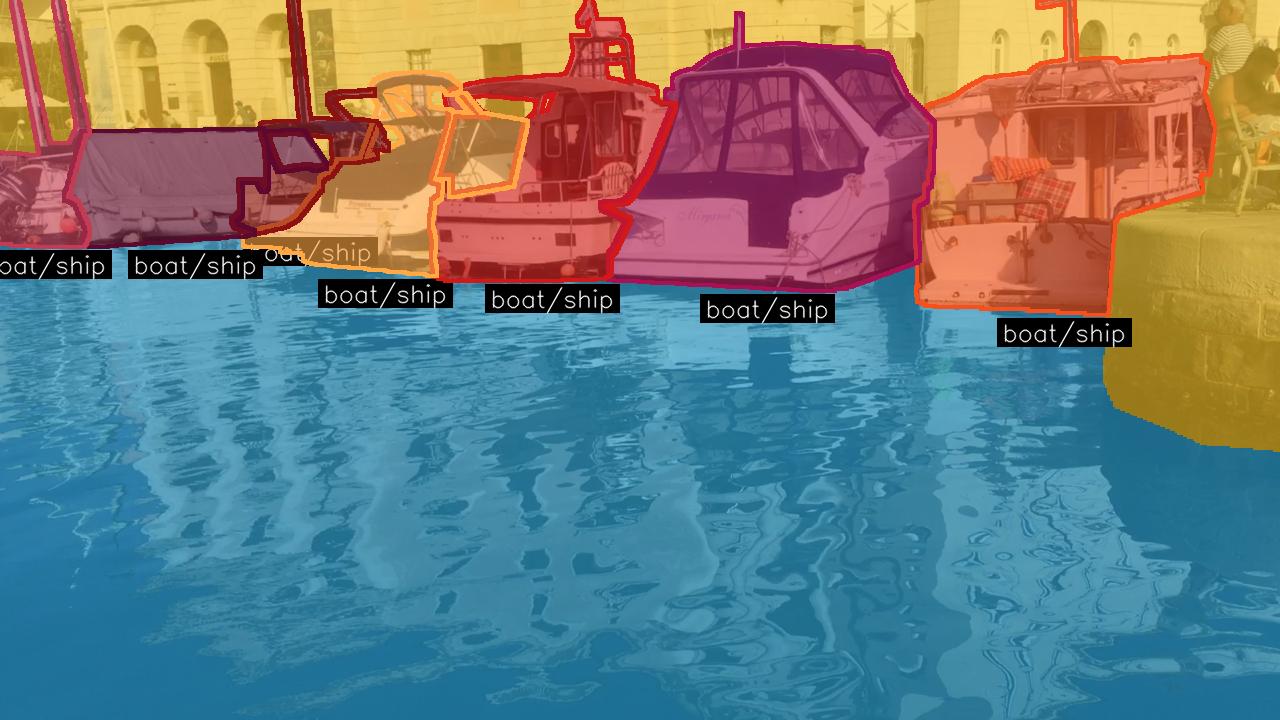

The LaRS dataset comes with three types of annotations: panoptic, semantic, and scene attributes.

Panoptic annotations are provided in COCO format and include the panoptic_annotations.json annotation file and masks in the panoptic_masks directory.
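In the standard COCO panoptic format, each entry of the `annotations` list names its mask PNG in `file_name` and lists its segments in `segments_info`. Every mask pixel's RGB color encodes a segment id as R + 256·G + 256²·B. The following is a minimal sketch of resolving a mask pixel to its category. It assumes LaRS follows that standard layout. The helper names and the toy annotation entry are hypothetical, and reading the PNG itself (e.g. with an image library) is left out.

```python
from __future__ import annotations

from typing import Optional


def rgb_to_segment_id(r: int, g: int, b: int) -> int:
    """Decode a COCO panoptic mask color into a segment id: R + 256*G + 256^2*B."""
    return r + 256 * g + 256 * 256 * b


def segment_categories(annotation: dict) -> dict[int, int]:
    """Map segment id -> category_id for one entry of the `annotations` list."""
    return {seg["id"]: seg["category_id"] for seg in annotation["segments_info"]}


def category_of_pixel(rgb: tuple[int, int, int], annotation: dict) -> Optional[int]:
    """Return the category_id under a mask pixel, or None if its segment is not listed.

    In COCO panoptic masks, id 0 marks unlabeled (void) pixels; it is never
    listed in segments_info, so it also yields None here.
    """
    return segment_categories(annotation).get(rgb_to_segment_id(*rgb))


# Hypothetical annotation entry (not real LaRS data), for illustration only.
example = {
    "file_name": "example.png",
    "segments_info": [
        {"id": 1, "category_id": 3},         # a water segment
        {"id": 7 + 256, "category_id": 14},  # a buoy segment
    ],
}

print(category_of_pixel((1, 0, 0), example))  # 3
print(category_of_pixel((7, 1, 0), example))  # 14
print(category_of_pixel((0, 0, 0), example))  # None
```

When processing a whole mask, building the `segment_categories` dictionary once per image and reusing it avoids rebuilding it for every pixel.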

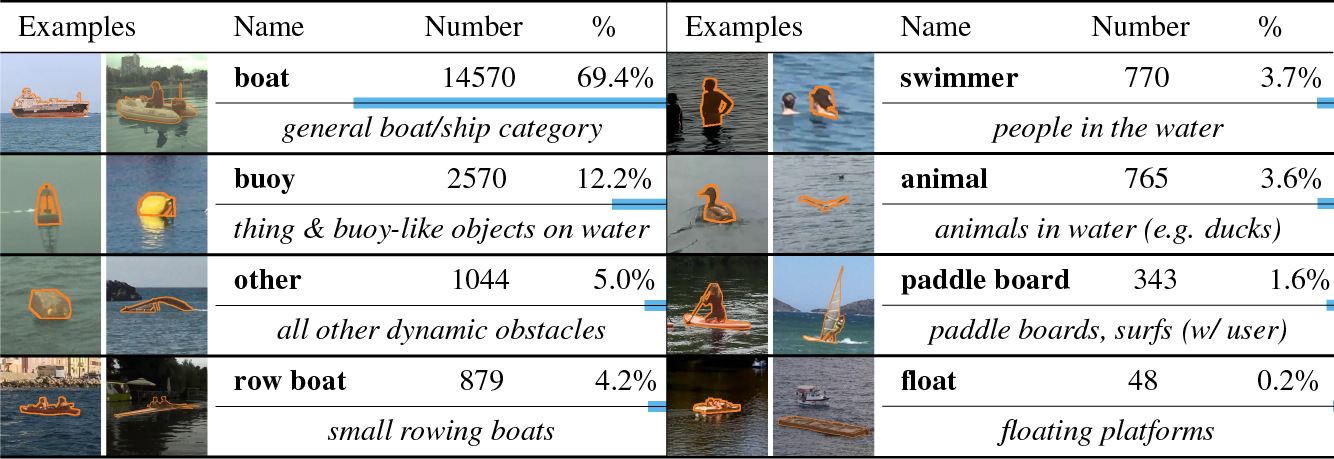

Categories and their IDs are defined in the categories field of the annotation file and are as follows:

| ID | Name | Type | Supercategory |
|----|------|------|---------------|
| 1 | Static Obstacle | Stuff | obstacle |
| 3 | Water | Stuff | water |
| 5 | Sky | Stuff | sky |
| 11 | Boat/ship | Thing | obstacle |
| 12 | Row boats | Thing | obstacle |
| 13 | Paddle board | Thing | obstacle |
| 14 | Buoy | Thing | obstacle |
| 15 | Swimmer | Thing | obstacle |
| 16 | Animal | Thing | obstacle |
| 17 | Float | Thing | obstacle |
| 19 | Other | Thing | obstacle |
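The `categories` field can be indexed by ID for quick lookups. The following is a minimal sketch. It assumes each entry uses the COCO panoptic keys `id`, `name`, `supercategory` and `isthing`, where `isthing` corresponds to the Thing/Stuff type listed in the category table. The function name is hypothetical. The example data is rebuilt from the table rather than read from the real file.

```python
from __future__ import annotations


def index_categories(data: dict) -> tuple[dict[int, dict], set[int]]:
    """Index the `categories` field of a parsed panoptic_annotations.json.

    Returns (category dict keyed by id, set of "thing" category ids).
    Assumes the COCO panoptic keys id, name, supercategory and isthing.
    """
    by_id = {cat["id"]: cat for cat in data["categories"]}
    thing_ids = {cid for cid, cat in by_id.items() if cat.get("isthing") == 1}
    return by_id, thing_ids


# With the real file this would be:
#   import json
#   with open("panoptic_annotations.json") as f:
#       data = json.load(f)
# Here the categories are rebuilt from the table: (id, name, isthing, supercategory).
TABLE = [
    (1, "Static Obstacle", 0, "obstacle"),
    (3, "Water", 0, "water"),
    (5, "Sky", 0, "sky"),
    (11, "Boat/ship", 1, "obstacle"),
    (12, "Row boats", 1, "obstacle"),
    (13, "Paddle board", 1, "obstacle"),
    (14, "Buoy", 1, "obstacle"),
    (15, "Swimmer", 1, "obstacle"),
    (16, "Animal", 1, "obstacle"),
    (17, "Float", 1, "obstacle"),
    (19, "Other", 1, "obstacle"),
]
data = {
    "categories": [
        {"id": i, "name": n, "isthing": t, "supercategory": s}
        for i, n, t, s in TABLE
    ]
}

by_id, thing_ids = index_categories(data)
print(by_id[14]["name"])  # Buoy
print(sorted(thing_ids))  # [11, 12, 13, 14, 15, 16, 17, 19]
```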

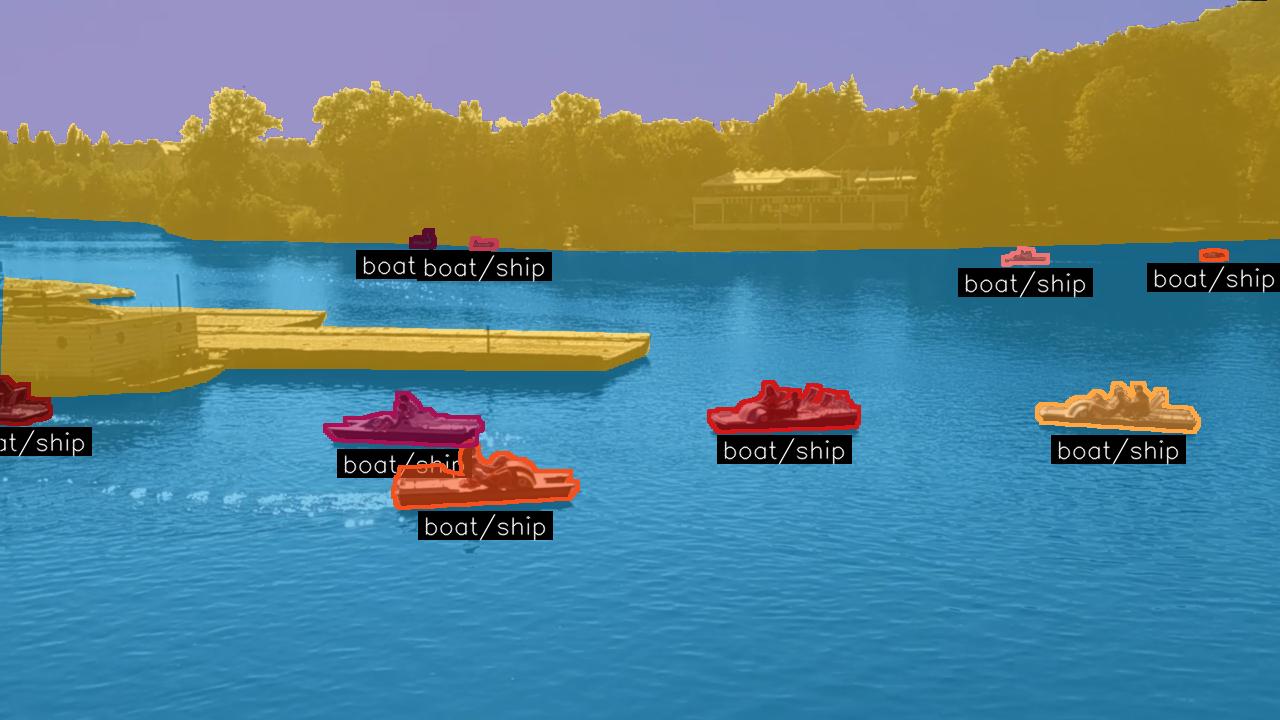

Semantic annotations are provided as PNG masks in the semantic_masks directory.

Semantic annotations are pixel-wise labels of three classes (obstacles, water and sky), which correspond to the supercategories of the panoptic categories. We use the following label IDs in the PNG files:

| ID | Category |
|----|----------|
| 0 | Obstacles |
| 1 | Water |
| 2 | Sky |
| 255 | Ignore |
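Because the semantic classes follow the panoptic supercategories, a semantic label can be derived from a panoptic category ID. The following is a minimal sketch of that mapping, using the supercategories and label IDs from the two tables. Sending unlabeled pixels and unknown categories to 255 (Ignore) is an assumption here, and all names are hypothetical.

```python
from __future__ import annotations

from typing import Optional

# Semantic label IDs used in the semantic_masks PNG files.
SEMANTIC_IDS = {"obstacle": 0, "water": 1, "sky": 2}
IGNORE_ID = 255

# Panoptic category id -> supercategory, copied from the panoptic category table.
SUPERCATEGORY = {
    1: "obstacle", 3: "water", 5: "sky",
    11: "obstacle", 12: "obstacle", 13: "obstacle", 14: "obstacle",
    15: "obstacle", 16: "obstacle", 17: "obstacle", 19: "obstacle",
}


def semantic_label(category_id: Optional[int]) -> int:
    """Map a panoptic category id (None = unlabeled pixel) to a semantic label id."""
    # Assumption: unlabeled pixels and unknown categories map to Ignore (255).
    return SEMANTIC_IDS.get(SUPERCATEGORY.get(category_id), IGNORE_ID)


def to_semantic_mask(category_grid: list[list[Optional[int]]]) -> list[list[int]]:
    """Convert a 2D grid of panoptic category ids into semantic label ids."""
    return [[semantic_label(c) for c in row] for row in category_grid]


# Toy 3x3 grid of category ids (hypothetical, not a real LaRS mask).
grid = [
    [5, 5, 5],
    [1, 14, 3],
    [3, 3, None],
]
print(to_semantic_mask(grid))  # [[2, 2, 2], [0, 0, 1], [1, 1, 255]]
```

The released semantic masks already contain these labels. The sketch only shows how the panoptic and semantic label spaces line up.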

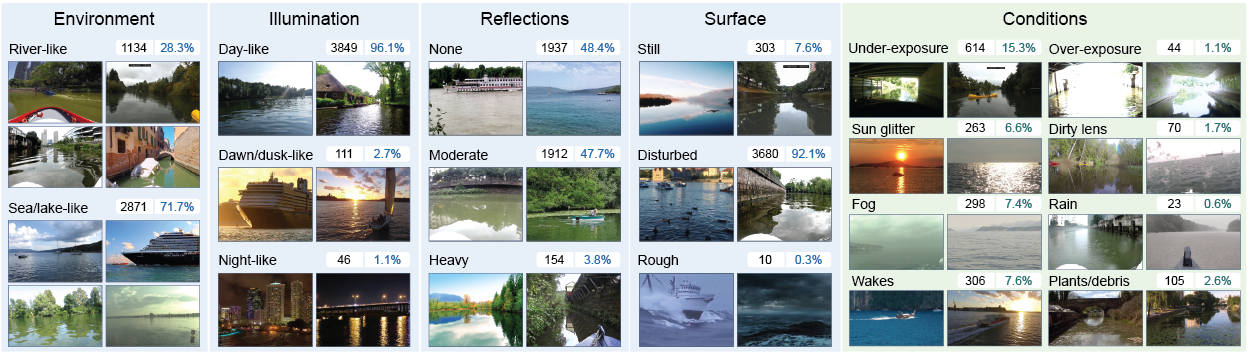

Scene attributes are stored in the image_annotations.json file.

BibTeX

If you use this dataset, please cite our work:

@InProceedings{Zust2023LaRS,
  title={LaRS: A Diverse Panoptic Maritime Obstacle Detection Dataset and Benchmark},
  author={{\v{Z}}ust, Lojze and Per{\v{s}}, Janez and Kristan, Matej},
  booktitle={International Conference on Computer Vision (ICCV)},
  year={2023}
}